"Power to the data," says Delaware's State Auditor

Delaware State Auditor Kathy McGuiness is leading an initiative to help states investigate their COVID-19 data collection and reporting. I spoke to her about what she hopes to find.

Welcome back to the COVID-19 Data Dispatch, where I pore over data rubrics like I was that annoying kid in kindergarten who asked for more homework. (I was.)

This week, I’m bringing you an exclusive interview with Delaware State Auditor Kathy McGuiness. Auditor McGuiness and I spoke about an initiative she started to coordinate investigations into COVID-19 data collection and reporting at the state level. Plus: testing in Texas, how to advocate for better race data, and (of course) an update on the HHS.

This is a baby newsletter, so I would appreciate anything you can do to help get the word out.

Delaware leads the charge on data integrity

Five State Auditors were on the task force to develop a framework for evaluating state COVID-19 data. Screenshot of the auditing template provided by Delaware State Auditor Kathy McGuiness.

This past Monday, I had the pleasure of speaking to Delaware State Auditor Kathy McGuiness. Auditor McGuiness was elected to her position in 2018, and she hit the ground running by implementing new ways for Delaware residents to report fraud and keep track of how their taxpayer dollars were being spent.

Now, State Auditor McGuiness is focused on COVID-19. She spearheaded the creation of a standardized template that she and other state auditors will use to evaluate their states’ COVID-19 data collection and reporting. The template, created in collaboration with auditors from Florida, Mississippi, Ohio, and Pennsylvania, is a rubric which watchdog offices may use as a baseline in determining which datasets they examine and which questions they ask of state politicians and public health officials.

State Auditor McGuiness sent me a copy of this rubric; I’ll go over its strengths and drawbacks later in this issue. But first, here’s our conversation (lightly edited and condensed for clarity).

Betsy Ladyzhets: What is a State Auditor?

State Auditor Kathy McGuiness: We’re the ones looking for accountability and transparency, in regards to taxpayer dollars—to identify fraud, waste, and abuse.

BL: What does your job usually look like? Are you usually conducting investigations like this?

KM: We do investigations, we have the fraud hotline. We triage cases, we document, and then sometimes it leads to an investigation, sometimes it leads to a referral.

BL: What was the impetus for this investigation to look into COVID data collection? Was there a particular event that alerted you to the issue of COVID data across states?

KM: I noticed there were inconsistencies in it being reported nationwide. Everything from the case numbers, to tests being inaccurately counted. I noticed on several national calls that there were other states that were looking for similar bits of information… So who better to look at this information from an independent perspective than the other state fiscal watchdogs, right?

And every state has different rules. You have some [state auditors] that are chairing oversight committees, or other committees… You also have several states where they’re legislative auditors, they don’t necessarily do things like this. They might just take their audit plan every year from the direction of their general assembly. Half [the auditors] are elected in the country, and half are not. So I knew not everyone is going to be able to participate or even get the permission.

There’s a national group called NASACT—the National Association of State Auditors, Comptrollers and Treasurers. I went through their organization, pitched this idea of doing a national template, and asked them, let me do a survey first and see if there’s interest. By the next day, I had a couple of questions, they shot it out in a mass email. I had 29 states respond and 21 show interest immediately. That’s when I comprised the task force with Florida, Mississippi, Ohio, and Pennsylvania. A multi-state effort. Since then, other states have come on with interest, some wanting to be in the process, some not.

The whole idea was to focus on this collection, monitoring and reporting, for several reasons. Instead of several states maybe wasting their time and resources asking similar questions—we have one baseline where we’re all asking the same questions and trying to find out how our state has done. Down the line, we’ll be able to look at each other, and see how others did, and maybe there are areas of improvement where we can capture a process that we didn’t think of.

BL: You mentioned that in some states, the state auditor or a similar position to what you have, that position is elected, and in other places it’s appointed. Were there any auditors on the task force who faced pushback from other state leaders?

KM: No, not at all. I mean, there’s a couple [of auditors] who said, “I’d like to participate but I can’t, that’s not how my job works.” And I believe we had another legislative auditor say, “I still want to do this. I’m going to ask for permission, but I won’t be able to until my General Assembly goes back in session.” So, it’s all over the map.

BL: Yeah. I’m curious about Florida in particular because there’s been a lot of tension there. There’s Rebekah Jones, who was a whistleblower scientist who worked at Florida’s public health department, who said she was fired for refusing to manipulate their data.

KM: Wow.

BL: So I was very curious to see that state on the list.

KM: I didn’t know about this... I met their auditor, Sherrill Norman, at a NASACT conference. She came to mind especially, because when I put this together I was looking for diversity in the group. But also, who has a performance audit division? Not all states do... When you talk to me, we only have 27 employees, and when you talk to Pennsylvania and they have 400—they have more resources than I do, and I wanted to move this along in a timely manner. There was no politics in the reason [Florida] was picked, if that makes sense.

BL: Yeah, that makes sense. I guess I’ll have to watch and see if Florida publishes the results of their audit.

KM: They will. Or—they should. These [results] are going to be like any other report, or audit, or engagement, or examination, or inspection, or investigation. You make it public.

BL: Could you tell me a bit more about how the framework was developed, or what the collaboration was like between you and the other State Auditors who worked on this? Were there any specific topics that were focuses? If you did research, or if you consulted experts, what was that process like?

KM: We had the experts—we had the lead performance audit teams. Our intent was, or is, that the states will be able to evaluate their own data. Then, if they so choose, compare with the other ones. But with these controls in place, this will paint a more accurate and conclusive picture, hopefully, with our efforts to combat this virus and control the spread.

BL: Are there particular goals in terms of say, looking at case data, or looking at deaths data, or anything else? Or are you broadly trying to figure out what will make it more accurate and more efficient?

KM: That’s exactly what we’re trying to do. That’s the whole intention here. Because things have been all over the map. And [the audit] just wants to accurately certify monitoring this data. It wants to be able to say, this is independent, we’re not political, we’re not driven by anything or anyone, and we’re all asking the same questions. Keeping in mind, not everyone’s going to be able to get to the answer.

BL: Right.

KM: Because sometimes [state auditors] have access to certain information, and sometimes they don’t.

BL: Is there an example of a piece of information where that differs, depending on how the state’s set up?

KM: Yes—one state might have a MOU, a memorandum of understanding, with an agency to be able to gather their data and look at it. I know New York[‘s auditor] can do that with the Medicaid data. They have constant access to it. There’s other [state auditors’ offices] that have to have agreements, there’s other states that don’t have access to certain data from their states. Every audit shop is different. [This framework] is just trying to give us something that we can all do together, and really be able to have a true comparison.

We’re gonna do the best we can, and obviously I’m going to report my data, put it out there. And say another state wants to compare against Delaware—well, they may not be able to on all points. It depends on what information is available, or granted.

BL: Right. So, I volunteer at the COVID Tracking Project, where we compile data from every state. And one major challenge we face is the huge differences in data definitions. Some states define their COVID case number as including confirmed and suspected cases, other states only include confirmed cases, and some don’t provide a definition at all. I’m curious about how the auditing framework addresses these kinds of different definitions.

KM: That’s one of the challenges. You’re faced with inconsistent data collection across all the public health departments, and that’s something we will review. We want to find out what is publicly available and what is not. And that also helps states compare with best practices. We’re just looking for a unified approach with the common metrics.

BL: Yeah. Ideally, every state would be using the same definition. But does every state have the data available to use the same definition? Some states just don’t track suspected cases.

KM: Correct. Well, I—they shall remain nameless, but they are participating, so you do want to watch this over the next few months. It was my understanding that one state isn’t even testing in nursing homes.

BL: Wow. I definitely will look out for that.

KM: Yeah. I was like, what? How do you do that? How is that possible? I’m not—making fun, I’m not poking blame. What I’m saying is, [we auditors want to look into] how each state reports their metrics and how they’re doing, so that we can determine best practices on our own.

BL: How does the auditing framework account for the fact that each state is facing different challenges because of its population or its geography? Like, I know Ohio has had major outbreaks in its prison populations, Florida had to shut down testing sites because of the hurricane recently. How is that accounted for in the fact that you’re trying to use the same framework in these different states?

KM: That’s a good question. I do know that one audit shop already spoke to going a little bit further. So, the auditing framework is flexible. We have our baseline, what we are all agreeing to ask. If they want to go further, they can.

Given the resource constraints of each auditor, we felt it was—we wanted to have something narrow enough that everyone could do in a timely fashion, and generic enough that everyone could do in a timely fashion. But if they wanted to go look further, they had the resources and time, it’s up to them.

BL: Do you have a prospective timeline for this? For Delaware specifically or in general, are there any requirements or timing goals?

KM: Yes. We have—obviously, like everyone else, we’re in the middle of several projects, many got held up… But this has become a priority. There's a team assigned, and they are moving forward. We’re hoping that, in the next three months, we can have something.

I know that a couple of other states have moved forward with this, and some have not been able to even begin the process of initiating this audit. The times are going to vary depending on the audit shops, and their audit plans, and their resources. And when I say “audit plan,” you usually have [your] audit plan ready by the time your next fiscal year is starting.

BL: I see. Within three months, that’s pretty good for such a thorough project.

KM: It’s aggressive. But we’re ready! It’s about acquiring information.

BL: And how will the results of the audit impact the states that are evaluated? In Delaware, or if you have any indication of what might happen in other places.

KM: The hope is that this template and subsequent state reviews validate efforts and certify the integrity of the data. And when it doesn’t, that those shortcomings can be quickly remedied. Again, we’re looking towards the future. Looking towards best practices. And I think this initiative will help policy-makers and help public health officials to better prepare by giving power to the data and knowledge. Truth and transparency.

BL: Power to the data!

KM: That’s right.

BL: That’s what we want! We want consistency, trust.

KM: We do. And we need transparency and accountability. People say they want it, right? So we’re gonna give it to them. And some states may have recommended changes to data collection based upon these audit results.

The office I took over was more of a “gotcha” office. “Ah, I gotcha, look what you did wrong.” And that’s not where I’m coming from. I’m coming from, partnerships, and collaboration, and there’s always room to improve. I don’t think there’s any penalty here, if any states fare poorly. We will be able to compare and contrast, and look at best practices, but we can all do better and work together to implement best practices for other people.

After our interview, I looked at the data audit template itself.

The template focuses on four key components: data collection, data quality, communication, and best practices. As State Auditor McGuiness explained, auditing teams may investigate additional questions around COVID-19 data, but any state which uses this framework needs to fill out all parts of the rubric so that results may be easily compared across states. (To anyone who has struggled through a COVID Tracking Project data entry shift, easily comparing across states sounds like a dream.)

The questions asked by this rubric fall into three main categories: collection, reporting, and monitoring. “Collection” questions ask which metrics the state tracked, including test types, case types, outcomes, hospitalization data, and demographic information. “Reporting” questions ask how state public health departments communicated with COVID-19 testing institutions and hospitals to gather data. “Monitoring” questions ask if states took certain steps to identify errors in their data, such as ensuring that COVID-19 test results were coded directly in reports from labs. This section also includes questions about contact tracing.

The questions forming this auditing framework, as well as its overarching goal of sharing best practices and restoring public trust in data, align well with the work of many reporters and researchers documenting the COVID-19 pandemic. However, I have to wonder if this template will seem insufficient before some state auditors even begin investigating. The template refers to only two types of tests, “COVID-19 tests” (which I assume means PCR tests) and “COVID-19 antibody tests.” Antigen tests are not mentioned, nor are any of the several other testing models on track to come on the market this fall. The template also fails to discuss data on any level smaller than a county, neglecting the intense need for district- or ZIP code-level data as schools begin reopening. And it only mentions demographic data—a priority for the many states where the pandemic has widened disparities—in one line.

Plus, just because state auditors can ask additional questions specific to local data issues they’ve seen doesn’t mean that they will. Speaking to State Auditor McGuiness made it clear to me that COVID-19 data decentralization in the U.S. is not going away any time soon. Not every state can even evaluate its data reporting in the same way, because some auditors’ offices are bound by state legislatures or are unable to access their own states’ public health records.

I look forward to seeing the results of these state data audits and making some comparisons between the 20 states committed to taking part. But to truly understand the scope of U.S. data issues that took place during this pandemic and set up best practices for the future, we need more than independent state audits. We need national oversight and standards.

HHS hospitalization data: more questions arise

Last Tuesday, the post on COVID-19 hospitalization data that I cowrote with Rebecca Glassman was published on the COVID Tracking Project’s blog. We pointed out significant discrepancies between the counts of currently hospitalized COVID-19 patients reported by the Department of Health and Human Services (HHS) and the counts reported by state public health departments. You can read the full post here, or check out the CliffsNotes in this thread:

That same day, the Wall Street Journal published an article on HHS’s estimates of hospital capacity in every state (which, as you may recall from my first newsletter issue, have been plagued with delays and errors). These hospital capacity estimates are based on the raw counts that Rebecca and I analyzed. It appears that errors in hospital reports are causing errors in HHS’s raw data, which in turn makes it more difficult for HHS analysts to estimate the burden COVID-19 is currently placing on healthcare systems. When the CDC ran this dataset, estimates were updated multiple times a week; now, under HHS, they are only updated once a week.

On Wednesday, the New York Times reported that 34 current and former members of a federal health advisory committee had sent a letter opposing the move of hospital data from the CDC to the HHS. These medical and public health experts cited new burdens for hospitals and transparency concerns as issues for HHS’s new data collection system. (The New York Times article references Rebecca’s and my blog post, which is pretty cool.)

In an earlier issue, I reported that several congressmembers had opened an investigation into TeleTracking, the company HHS contracted to build its new data collection system. Well, the New York Times reported on Friday that TeleTracking is refusing to answer congressmembers’ questions because the company signed a nondisclosure agreement.

And finally, HHS chief information officer José Arrieta resigned on Friday. I’m tempted to hop on the next bus to Pittsburgh and start banging on the door of TeleTracking’s headquarters if we don’t get answers soon.

What’s up with testing in Texas?

The COVID Tracking Project published a blog post this week in which three of our resident Texas experts, Conor Kelly, Judith Oppenheim, and Pat Kelly, describe a dramatic shift in Texas testing numbers which has taken place in the past two weeks.

On August 2, the number of tests reported by Texas’s Department of State Health Services (DSHS) began to plummet. The state went from a reported 60,000 tests per day at the end of July to about half that number by August 12. Conor, Judith, and Pat explain that this overall drop coincides with a drop in tests that DSHS classifies as “pending assessment,” meaning they have not yet been assigned to a county. Total tests reported by individual Texas counties, meanwhile, have continued to rise.

Although about 85,000 “pending assessment” tests were logged on August 13 to fill Texas’s backlog, this number does not fully account for the total drop. For full transparency in Texas, DSHS needs to explain exactly how they define “pending assessment” tests, how tests are reclassified from “pending” to being logged in a particular county, and, if tests are ever removed from the “pending” category without reclassification, when and why that happens. As I mentioned in last week’s issue, DSHS has been known to remove Texans with positive antigen tests from their case count; they could be similarly removing antigen and antibody tests reported by counties from their test count.

If you live in Texas, have friends and family there, or are simply interested in data issues in one of the country’s biggest outbreak states, I highly recommend giving the full post a read. For more Texas test reporting, check out recent articles from Politico and the Texas Tribune.

Help advocate for better COVID-19 demographic data

The COVID Racial Data Tracker, a collaboration between the COVID Tracking Project and the Boston University Center for Antiracist Research, collects COVID-19 race and ethnicity data from 49 states and the District of Columbia. We compile national statistics and compare how different populations are being impacted across the country.

But there are a lot of gaps in our dataset. We can only report what the states report, and many states have issues: for example, 93% of cases in Texas do not have any reported demographic information, and West Virginia has not reported deaths by race since May 20.

A new form on the COVID Tracking Project website allows you to help us advocate for better quality data. Simply select your state, then use the contact information and suggested script to get in touch with your governor. States with specific data issues (such as Texas and West Virginia) have customized scripts explaining those problems.

If you try this out for your state, please use the bottom of the form to let us know how it went!

Featured data sources

Lost on the frontline: This week, Kaiser Health News and The Guardian released an interactive database honoring American healthcare workers who have died during the COVID-19 pandemic. 167 workers are included in the database so far, and hundreds more are under investigation. Reading the names and stories of these workers is a small way to remember those we have lost.

The Long-Term Care COVID Tracker, by the COVID Tracking Project: Residents of nursing homes, assisted living facilities, and other long-term care facilities account for 43% of COVID-19 deaths in the U.S. A new dataset from the COVID Tracking Project compiles these sobering numbers from state public health departments. You can currently explore a snapshot of the data as of August 6; the full dataset will be released this coming week.

Household Pulse Survey by the U.S. Census: From the end of April through the end of July, the U.S. Census ran a survey program to collect data on how the COVID-19 pandemic impacted the lives of American residents. The survey results include questions on education, employment, food security, health, and housing. I looked at the survey’s final release for a Stacker story; you can see a few statistics and charts from that story here:

Source callout

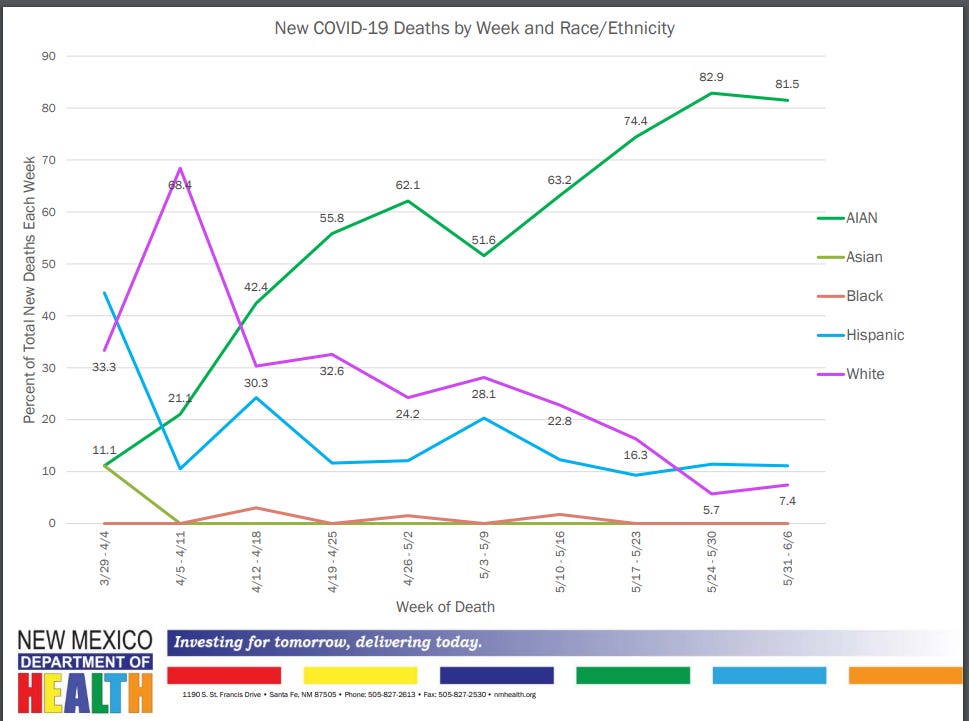

New Mexico reports COVID-19 death demographics in a way that makes me suspect they have it out for us at the COVID Racial Data Tracker specifically.

The state occasionally includes race and ethnicity information for deaths in its Modeling Updates, released once a week. I say “occasionally” because there is no rhyme or reason to when this key demographic information makes it into the update. And there is also no rhyme or reason to how these data are presented:

This chart is from the Modeling Update released on June 9. Yes, you’re reading it right: those are percentages, expressed in a line chart. Some of the points don’t even have data labels. Alice Goldfarb, our team lead for race data, called this “performance art.”

New Mexico’s newest Modeling Update, released this past Tuesday, has shown a slight improvement in the state’s data presentation: the percentages are now expressed in bar charts, and total deaths for each racial group are included below the graph. (See page 20 of the PDF.) Still, in order to present a complete picture of how COVID-19 is impacting minorities in New Mexico, the state must release these data regularly and include precise figures.

More recommended reading

My recent Stacker bylines

More news from the COVID Tracking Project

Counting COVID-19 Tests: How States Do It, How We Do It, and What’s Changing

Tests, Cases, and Hospitalizations Keep Dropping: This Week in COVID-19 Data, Aug 13

Bonus

New York’s true nursing home death toll cloaked in secrecy (Associated Press)

Coronavirus Cases Are Surging. The Contact Tracing Workforce Is Not (NPR)

That’s all for today! I’ll be back next week with more data news.


And if you have any feedback for me, or if you want to ask me your COVID-19 data questions, you can send me an email (betsyladyzhets@gmail.com).